The new machines making waves in audio

The creative world of sound, music, design and voice-over is at the forefront of an AI-led tech revolution that is poised to lend a hand – or to take the hand that feeds it and replace it completely. Tim Cumming talks to the sound creatives wrapping their ears around the unfolding possibilities and possible pitfalls.

The sound of recorded sound is changing. Of course, it has been since we began recording what we heard. The first time was 9 April 1860: a voice singing Au Clair de la Lune (By the Light of the Moon) into the ‘phonautograph’ of Édouard-Léon Scott de Martinville, presaging Edison’s phonograph by nearly two decades.

Recorded sound has been a harbinger at the forefront of socio-cultural change ever since – from the first shellac 78 records through the first long-players and singles of the post-war rock and pop boom, the arrival of the synthesiser, the CD, then the MP3, and to the point now where an AI programme named Suno can create songs in a couple of seconds, guided by a simple prompt.

Sound surrounds us, and when sound changes, the culture changes with it. AI’s simulations raise troubling questions as to whether future recordings will be a trustworthy record of what happened, what was said. They also raise troublingly exciting capabilities, innovations and extrapolations that many are embracing as a step change in the worlds of branding and creativity.

That long-dead voice from 1860 singing Au Clair de la Lune was finally heard in 2008 thanks to some clever digital software that converted the lines made by the phonautograph in a coating of soot on paper into a sound file.

That was 2008. This is 2024. Everything’s different. “I was at a party with a friend who’d been made redundant and was upset, so I got her to tell me about it and I put it into a song – a really good diss East Coast hip hop track. The voices are pretty amazing.” So says Anton Christodoulou, Group Chief Technology Officer at London’s Imagination. He’s talking about Suno, the AI ‘building a future where anyone can make great music’.

It’s one of AI’s game-changers – one of many over the past 18 months or so – and a potential P45 for human music-makers everywhere. Or is it? “Once you’ve used it a lot,” says Christodoulou, “there’s definitely a lack of humanness. If you sing a song that’s emotional and close to your heart, that comes through in the way you breathe, the way you push your voice, the intonation of what you’re singing.”

In other words, authentic emotional tells that may be just a few digital tweaks away. “In theory, it should be able to find the same emotional high and low points,” agrees Christodoulou, “even though it has no idea it’s doing that. It’s blind. A friend calls AI ‘anthropological indexing’. It’s taking the sum of documented human existence and playing it back to us. It is a master mimic.”

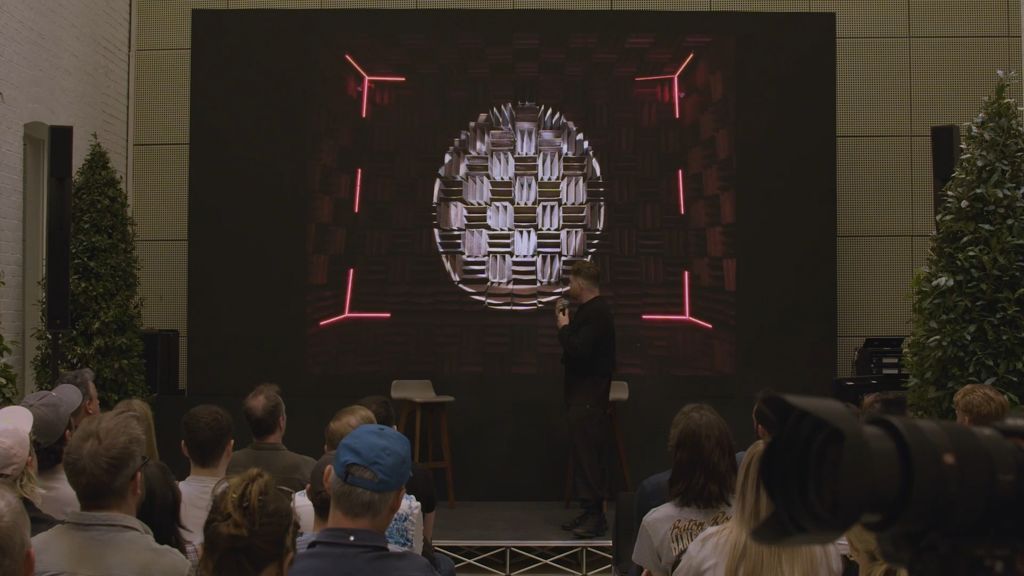

Above: Director and vocalist Harry Yeff, aka REEPS100, gives an AI-powered performance and talk for Imagination Labs.

We don’t tend to turn to mimics for innovative solutions, and innovation still seems a uniquely human trait. And that comes in remarkable, unexpected ways. Earlier this year, Imagination hosted a talk and performance by neurodivergent director and vocalist Reeps100. “Harry trained an AI model using his vocal performances to play his own voice back to him. Then he used what the AI came back with, which was, in many cases, completely new ways of doing things, to train his voice to do what the AI was doing.”

Such innovative feedback loops between human creative and sandboxed AI are a form of ‘adversarial learning’. “This idea of AI being used to explore new territory or play back stuff you already know in new ways so that you can open up new pathways in your brain is super interesting,” says Christodoulou.

Singers such as Grace Jones are already training AI on their vocals for future acts of self-collaboration. In the same way, you could train an AI on your own voice, to become that invaluable assistant who speaks exactly as you do. If only most of us didn’t hate the sound of our own voice.

“Probably the most interesting voice in this area is Grimes,” says Grant Hunter, Chief Creative Officer at London agency Iris. “In April 2023 she invited anyone to use her AI-cloned voice, with Grimes AI-1. She’d split the royalties 50-50.” He points to UMG’s catalogue-wide digital cloning of its artists and their voices, as well as companies such as Worldwide XR, which owns the digital rights to dead icons being cloned to speak again. Think James Dean, Bette Davis, Malcolm X.

Above: Grace Jones is training AI on her own voice for future collaborations.

“An artist’s voice, whether spoken, sung or written, is uniquely theirs,” says Hunter. “It’s our lived experience, our understanding of the human condition that provides the fuel for the stories we tell. It’s what makes people feel and connect with the work we put out there.” So, he asks, are artists literally losing their voices if they give away one of their most powerful assets? If anyone can control that instrument, then is that voice still uniquely theirs? And once they have released that voice to the world, have they lost control?

“The possibility of artists of today being able to collaborate with legends from the past is an intoxicating proposition,” adds Hunter. “Blurring the timeline and having artists co-create with the stars they were inspired by could create truly amazing new possibilities. But only if the collaborations are true to the integrity, intention and moral compass of the deceased star.”

That’s a very big IF. As he says: “The danger is that bad actors can subvert those voices for their own ideological gain.”

In the world of brand voice and voice-over, it’s only in the past few years that accents – regional, minority, multi-cultural – have gained traction and become more ubiquitous. “Good casting for regional accent voiceovers can do wonders for making a brand message authentic and believable,” says Charlie Ryder, Digital and Innovation Director at Elvis London. “Peter Kay for Warburtons, for example.”

Ryder sees AI spreading the regional word further and deeper into branding. “We can use it to help increase the representation of regional and diverse accents, and utilise it to create multiple, varied voiceovers to localise adverts in a new way. When it comes to training AI voices, we can make a difference by using diverse data – regional accents, colloquial phrases and sayings – and mobilise AI as a way to better reflect the 98 per cent of the UK who don’t speak with an RP accent.”

Credits

- Agency WCRS/UK

- Director Declan Lowney

- Editing Company Stitch

- Post Production Freefolk

- Sound Design Grand Central Recording Studios (GCRS)

- Agency Producer Helen Powlette

- Creative Gina Ramsden

- Creative Freya Harrison

- Creative Director Andy Lee

- Creative Director Jonny Porthouse

- Executive Creative Director Billy Faithfull

- Director of Photography Ray Coats

- Post Producer Cheryl Payne

- Editor Leo King

- VFX Supervisor Jason Watts

- Sound Designer Tom Pugh

- Sound Designer Ben Leeves

- Colourist Paul Harrison

- Producer Simon Monhemius

Peter Kay's voice was the best thing since sliced bread for Warburton's.

Sophie Stucke, Strategy Director at Mother Design, sees a powerful creative symbiosis arising from human creatives getting down with AI’s sonic mimicry. “I like the idea of it helping to democratise the music industry and break down traditional barriers in creative fields,” she says. “Tools like Suno make music creation accessible to everyone, the way camera phones democratised photography. But AI will never be able to replace the human-to-human music experience,” she adds. “The emotional connection between performer and audience gives music its deeper meaning and resonance – something AI cannot replicate.”

It’s how creatives interact and innovate with AI as a tool that excites her. “The future likely lies in human-AI symbiosis,” she says, “where AI augments rather than replaces human creativity. The key is striking a balance. AI can enhance creativity, but over-reliance risks stifling it. Ultimately, the goal is to harness its potential while maintaining the unique emotional depth and character that only human creatives can provide.”

For Owen Shearer, Sound Designer and Mixer at Sonic Union in New York, Suno and co are nowhere near being able to replace human composers. “People are actually very attuned to what makes AI-created work feel ‘off’,” he says. “We are humans sonically connecting with other humans, after all. There are generative AI products that can synthesise new sounds based on text prompts. For the time being their output is too low-quality to be useful, but this could soon result in some compelling idea-generating capabilities – especially when considering how those might embed branded language into a sonic element.”

One example of a singular AI-human symbiosis comes from London’s sonic branding agency DLMDD. Its Senior Brand Music Consultant, Erin McCullough, says: “We used AI to create a new digital instrument for Singapore Airlines, translating the 14 native flowers of Singapore into sound. A symphonic composer then wrote the new sonic identity of Singapore Airlines from the instrument. Bringing together technology and human composition each brought out the best in the other.”

Above: DLMDD used AI to create a new sonic brand identity for Singapore Airlines.

And while AI is a great timesaver in assembling final deliverables – DLMDD may be distributing 100 or more versions of a track – its impact on areas such as library music is less rosy. “Brands may start to go direct to AI-generated music solutions for time and cost savings,” admits McCullough, “especially if they just need a quick solution for below-the-line assets. But if we’re looking at ambitious brands who are passionate about music, or global TV campaigns, or TV or film, the role of the music supervisor won’t change that much.”

She’s clear on whether AI-generated music is as ‘good’ as the real thing. “I work day-in day-out in commissioning music and composers, and the AI-generated demos we’ve tested out sound worse and very obviously not real, even to non-musically trained ears. As it currently stands, the AI tools available do not hold a candle to real production.”

“Brands come to us and they want to be authentic and human,” adds McCullough, “they want to stand out, capture attention, to engage, so we have to ensure that, in this new world, the power of human creativity and the magic of music is never overlooked and undermined. The obvious gains are in productivity, time and cost savings. The risk, though, comes in if we assign the creative and strategic work itself to AI.”

Music is not only the food of love, but in branding is a secure shortcut to create understanding and add nuance and depth to storytelling. At audio post studio Forever Audio, Global Head of Production Sam Dillon has seen a steep rise in sonic branding requests. “Brand music, sonic triggers and ‘ownable’ bespoke SFX palettes have become a vital part of the marketeer’s toolkit,” he says. But he doesn’t see a dominant role for AI – outside of being a valuable tool – when it comes to sound design. “It lacks not only the nuanced understanding and intuition of human sound designers, but also the ability to sculpt sounds in order to evoke the desired emotional response from audiences.”

He sees Covid having spurred the growth of remote voice-over recording sessions, but still believes that humans being together in a room makes for the best results. “Nothing can compare to the creativity and collaboration that comes from an in-person recording session,” he says. But will that hold when AI comes into play as a voice-over tool – or as a replacement?

Grimes performing at Coachella 2024. Last year the singer invited anyone to use her AI-cloned voice Grimes AI-1.

“AI tools might cannibalise the primary income of VO talent,” agrees M&C Saatchi's Head of Generative AI, James Calvert. “It's a legitimate concern, but one that can be addressed.” Calvert uses tools such as text-to-voice maker ElevenLabs, and AI production studio AudioStack. “They promise scalability, speed, and consistent quality. AI does the heavy lifting and reduces dependency on a single voice. But we have to strike a balance where everyone wins. This means having upfront agreements on usage, fair compensation for talent but also opportunity to save on studio costs and precious time.”

Whether or not that balance is struck – perhaps only on a balance sheet in accounts – the tools AI can provide range from ‘the first empathic AI voice’ generated by Hume AI (“bringing a nuanced control over vocal utterances, the subtle ‘umms’ and intricate patterns of tune, rhythm, and timbre”) to instant translation into multiple languages that retains the original’s style and tone, and the raising of the dead when it comes to iconic brand voices. “Replicating iconic brand voices isn't a pipe dream,” says Calvert, “it's a technical reality. AI allows us to maintain the integrity and recognisability of a voice, while scaling it across more spaces.”

Except the legal one – the licensing and usage rights. The whole issue around the ownership of perfectly replicated human traits is an unregulated frontier outpost on the cutting edge of a socio-cultural Wild West. “What a time to be in the creative industries!” exclaims Calvert. “AI is revolutionising how things get done, but AI is neither magic nor magician; it’s a solution seeking problems.”

What we make of those problems, and the solutions that AI can deliver, may come to define and reshape what it is to be creative, and even what it is to be human. Keep your ears tuned and your eyes peeled, and happy prompting.